On December 8 and 10, 2025, NASA's Perseverance Mars rover made history. The rover traversed 1,496 feet (456 meters) of Martian terrain on a route planned entirely by artificial intelligence -- specifically, a specialized iteration of Anthropic's Claude 4.5 vision-language model. The effort, led by NASA's Jet Propulsion Laboratory (JPL) in Southern California, marked the first time generative AI was used to plan a drive on another planet.

This is not just a milestone for space exploration. It is a case study in deploying AI in one of the most extreme, unforgiving environments imaginable -- and the lessons it offers are relevant to any developer working with autonomous systems, computer vision, or AI reliability.

The Challenge of Driving on Mars

To appreciate what Claude accomplished, it helps to first understand why driving on Mars is so extraordinarily difficult.

The Communication Delay

Depending on the two planets' orbital positions, a radio signal takes roughly 3 to 22 minutes to travel between Earth and Mars, so a round trip takes roughly 6 to 45 minutes. Real-time remote control is impossible. Every drive must be planned in advance and executed autonomously.
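The delay is simply distance divided by the speed of light. A minimal sketch, assuming approximate Earth-Mars distances of about 54.6 million km at closest approach and about 401 million km at the farthest (round figures, not mission data):

```python
# Speed of light in vacuum, km/s (exact by SI definition)
SPEED_OF_LIGHT_KM_S = 299_792.458

def one_way_light_minutes(distance_km: float) -> float:
    """Minutes for a radio signal to cover distance_km."""
    return distance_km / SPEED_OF_LIGHT_KM_S / 60

# Assumed approximate Earth-Mars distance extremes, in km
CLOSEST_KM = 54.6e6
FARTHEST_KM = 401e6

for label, distance in [("closest", CLOSEST_KM), ("farthest", FARTHEST_KM)]:
    one_way = one_way_light_minutes(distance)
    print(f"{label}: one-way {one_way:.1f} min, round trip {2 * one_way:.1f} min")
```

With these figures it prints about 3.0 minutes one way (6.1 round trip) at closest approach and about 22.3 minutes (44.6 round trip) at the farthest.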

The Traditional Process

Historically, Mars rover drives have been planned by human operators at JPL who:

- Receive high-resolution imagery from the rover's navigation cameras and orbital imagery from NASA's Mars Reconnaissance Orbiter (MRO)

- Manually analyze terrain features -- rocks, slopes, sand ripples, bedrock

- Plot a safe path using waypoints

- Upload the drive commands to the rover

- Wait for telemetry to confirm the drive executed successfully

This process is meticulous, time-consuming, and limits how far a rover can travel in a given Martian day (sol). The typical planning cycle can take an entire Earth day for a single drive sequence.

Perseverance's Existing Autonomy

Perseverance already had some autonomous navigation capability through its AutoNav system, which uses onboard cameras and traditional computer vision algorithms to avoid immediate hazards. But AutoNav operates locally -- it can swerve around a rock in its path, but it cannot plan an optimal multi-hundred-meter route across complex terrain.

How Claude Changed the Game

The collaboration between JPL and Anthropic introduced generative AI into the route-planning pipeline for the first time, and the results were striking.

The AI Pipeline

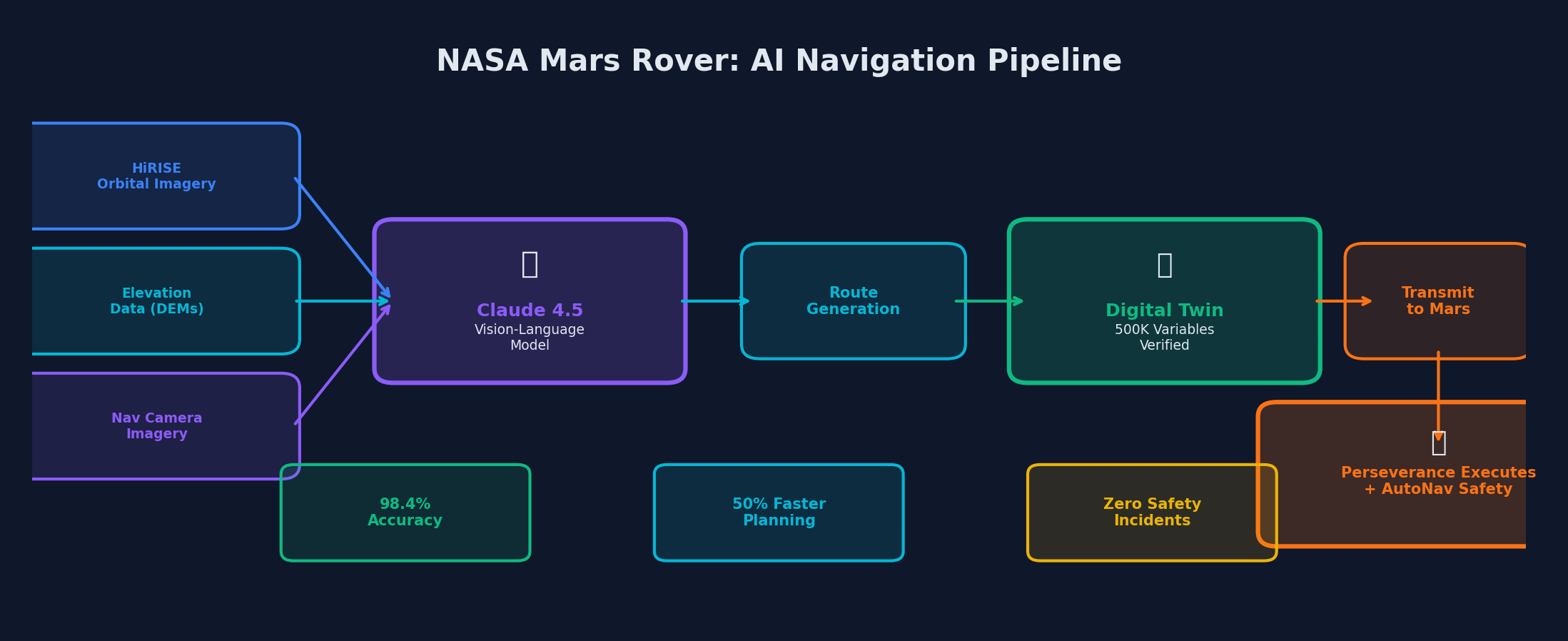

The complete AI navigation pipeline: from orbital imagery through Claude's analysis to rover execution

Here is how the system works, based on information from JPL and Anthropic:

Step 1: Data Ingestion

The system ingests multiple data sources:

- High-resolution orbital imagery from the HiRISE camera aboard NASA's Mars Reconnaissance Orbiter (25 cm/pixel resolution)

- Digital elevation models (DEMs) providing terrain slope data

- Previously captured navigation camera imagery from Perseverance itself

- Historical traverse data from previous rover drives in the area
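Slope, the quantity the DEMs contribute, can be derived by finite differences over the elevation grid. The sketch below assumes a regularly gridded DEM with elevations in meters and uses NumPy; the 25 degree threshold in the usage line is a hypothetical placeholder, not Perseverance's actual limit.

```python
import numpy as np

def slope_degrees(dem: np.ndarray, cell_size_m: float) -> np.ndarray:
    """Per-cell slope in degrees for a DEM whose values are elevations in meters."""
    # np.gradient returns derivatives along axis 0 (rows, y) then axis 1 (columns, x)
    dz_dy, dz_dx = np.gradient(dem, cell_size_m)
    return np.degrees(np.arctan(np.hypot(dz_dx, dz_dy)))

def steep_cells(dem: np.ndarray, cell_size_m: float, max_slope_deg: float) -> np.ndarray:
    """Boolean mask of cells steeper than max_slope_deg (candidate hazards)."""
    return slope_degrees(dem, cell_size_m) > max_slope_deg

# Toy DEM: a ramp rising 1 m per 1 m cell to the east, i.e. a 45 degree slope
ramp = np.tile(np.arange(5, dtype=float), (5, 1))
print(steep_cells(ramp, cell_size_m=1.0, max_slope_deg=25.0).all())  # True
```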

Step 2: Vision-Language Model Analysis

Claude 4.5's vision-language capabilities analyze this multimodal dataset to identify critical terrain features:

- Bedrock -- safe, stable driving surfaces

- Outcrops -- potential science targets but navigation obstacles

- Boulder fields -- hazardous terrain requiring avoidance

- Sand ripples -- deceptively dangerous terrain that can trap wheels

- Loose soil -- areas where the rover might slip or sink

The model achieved 98.4% accuracy in hazard identification compared to expert human assessment.
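The accuracy figure does not specify how it was measured (per pixel, per feature, or per region). A sketch assuming per-feature agreement with expert labels; it also reports per-class recall, because a single accuracy number can hide weak performance on a rare but dangerous class such as sand ripples. The labels below are toy data, not mission results.

```python
from collections import Counter

def accuracy(predicted: list[str], expert: list[str]) -> float:
    """Fraction of features where the model's label matches the expert's."""
    if not expert or len(predicted) != len(expert):
        raise ValueError("need equal-length, non-empty label lists")
    return sum(p == e for p, e in zip(predicted, expert)) / len(expert)

def per_class_recall(predicted: list[str], expert: list[str]) -> dict[str, float]:
    """For each expert class, the share of its features the model labeled correctly."""
    totals = Counter(expert)
    hits = Counter(e for p, e in zip(predicted, expert) if p == e)
    return {cls: hits[cls] / n for cls, n in totals.items()}

# Toy labels: the model misses one of two sand-ripple features
expert = ["bedrock"] * 8 + ["sand_ripple"] * 2
predicted = ["bedrock"] * 9 + ["sand_ripple"]
print(accuracy(predicted, expert))          # 0.9
print(per_class_recall(predicted, expert))  # {'bedrock': 1.0, 'sand_ripple': 0.5}
```

Here 90% overall accuracy coexists with a 50% miss rate on the class most likely to trap a wheel.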

Step 3: Route Generation

Using its analysis, Claude generated a continuous path with precisely defined waypoints. These waypoints were expressed in Rover Markup Language (RML), the command format used by Perseverance's flight software.
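The source material does not describe how the model derived its waypoints, so the following is not Claude's method. It is a classical baseline for the same task: A* search over a cost grid (hazard cells marked impassable), then thinning the resulting path into waypoints wherever the heading changes. The grid, unit costs, and 4-connected moves are simplifying assumptions, and the output is plain grid coordinates, not RML.

```python
import heapq

def astar(cost, start, goal):
    """A* over a 4-connected grid.

    cost[r][c] is the (>= 1) cost of entering cell (r, c); None marks a hazard
    the route must not enter. Returns the cheapest path as (row, col) cells,
    or None if the goal is unreachable.
    """
    rows, cols = len(cost), len(cost[0])

    def h(cell):  # Manhattan distance: admissible because every step costs >= 1
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(h(start), 0, start)]  # (f = g + h, g, cell)
    came_from = {start: None}
    best_g = {start: 0}
    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell == goal:
            path = []
            while cell is not None:  # walk parents back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > best_g[cell]:
            continue  # stale entry; a cheaper route to this cell was found later
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and cost[nr][nc] is not None:
                new_g = g + cost[nr][nc]
                if new_g < best_g.get(nxt, float("inf")):
                    best_g[nxt] = new_g
                    came_from[nxt] = cell
                    heapq.heappush(frontier, (new_g + h(nxt), new_g, nxt))
    return None

def to_waypoints(path):
    """Thin a cell path to its endpoints plus every cell where the heading changes."""
    if len(path) <= 2:
        return list(path)
    waypoints = [path[0]]
    for prev, cur, nxt in zip(path, path[1:], path[2:]):
        if (cur[0] - prev[0], cur[1] - prev[1]) != (nxt[0] - cur[0], nxt[1] - cur[1]):
            waypoints.append(cur)
    waypoints.append(path[-1])
    return waypoints

# Toy cost grid: the middle row is mostly hazard, forcing a detour east
grid = [
    [1, 1, 1],
    [None, None, 1],
    [1, 1, 1],
]
print(to_waypoints(astar(grid, (0, 0), (2, 0))))
# [(0, 0), (0, 2), (2, 2), (2, 0)]
```

Thinning matters because a route expressed as a handful of waypoints is far easier for humans and verification tools to review than a cell-by-cell path.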

Step 4: Digital Twin Verification

Before any commands were sent to Mars, JPL engineers processed Claude's route through their "digital twin" -- a virtual replica of Perseverance and its surrounding terrain. The verification process checked over 500,000 telemetry variables to ensure the AI-generated commands were fully compatible with the rover's flight software and safety constraints.
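At its core, the final check in a verification step like this compares every simulated telemetry value against an allowed range. A minimal sketch, assuming the twin emits one dict of named values per timestep; the variable names and limits are hypothetical. At the scale of hundreds of thousands of variables, a production version would likely vectorize this rather than loop in Python.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Limit:
    low: float
    high: float

def find_violations(frames: list[dict[str, float]],
                    limits: dict[str, Limit]) -> list[tuple]:
    """Return (timestep, variable, value) for every out-of-range or missing value."""
    violations = []
    for step, frame in enumerate(frames):
        for name, limit in limits.items():
            value = frame.get(name)
            if value is None:
                # Missing telemetry counts as a failure, not a pass
                violations.append((step, name, None))
            elif not limit.low <= value <= limit.high:
                violations.append((step, name, value))
    return violations

# Hypothetical variables and limits
LIMITS = {"pitch_deg": Limit(-20.0, 20.0), "battery_soc": Limit(0.3, 1.0)}
frames = [
    {"pitch_deg": 4.0, "battery_soc": 0.92},
    {"pitch_deg": 23.5, "battery_soc": 0.90},
    {"pitch_deg": 2.0},
]
print(find_violations(frames, LIMITS))
# [(1, 'pitch_deg', 23.5), (2, 'battery_soc', None)]
```

A plan passes only when the returned list is empty.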

Step 5: Execution

Once verified, the commands were transmitted to Mars and executed by Perseverance autonomously. The rover's AutoNav system remained active as a safety layer, able to halt the drive if it encountered an unexpected hazard.

The Results

| Metric | Drive 1 (Dec 8) | Drive 2 (Dec 10) | Combined |
|---|---|---|---|
| Distance | 689 ft (210 m) | 807 ft (246 m) | 1,496 ft (456 m) |
| Hazard identification accuracy | 98.4% | 98.4% | 98.4% |
| Planning time reduction | ~50% | ~50% | ~50% |
| Safety incidents | 0 | 0 | 0 |
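The combined distance is the sum of the two drives. Converting with the exact foot-to-meter factor confirms the metric figures in the table:

```python
FT_TO_M = 0.3048  # exact: the international foot is defined as 0.3048 m

drives_ft = {"Dec 8": 689, "Dec 10": 807}
for day, ft in drives_ft.items():
    print(f"{day}: {ft} ft = {ft * FT_TO_M:.0f} m")  # 210 m, then 246 m
total_ft = sum(drives_ft.values())
print(f"Combined: {total_ft:,} ft = {total_ft * FT_TO_M:.1f} m")  # 1,496 ft = 456.0 m
```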

JPL engineers report that Claude cut the time required for route planning in half. For a mission where every sol counts and science targets are time-sensitive, this efficiency gain is enormously valuable.

Technical Architecture: What Developers Can Learn

The NASA-Anthropic collaboration offers several architectural patterns that are applicable far beyond Mars exploration.

1. Vision-Language Models for Spatial Reasoning

The use of a VLM (Vision-Language Model) for terrain analysis demonstrates a pattern that developers building any geospatial, robotics, or computer vision system should study.

Traditional approach: Separate computer vision model for object detection, separate model for classification, rule-based system for path planning.

VLM approach: A single model that can see the terrain, understand the context (e.g., "this is a sand ripple field that extends 50 meters to the northeast"), and reason about it in natural language.

```python
# Conceptual example: VLM-based terrain analysis
# This illustrates the pattern, not the actual NASA implementation
import json

from anthropic import Anthropic

client = Anthropic()  # reads the API key from the ANTHROPIC_API_KEY environment variable

PROMPT_TEMPLATE = """Analyze this orbital terrain image for rover navigation.
Elevation data: {elevation}

Identify and classify:
1. Safe driving surfaces (bedrock, compacted soil)
2. Hazards (boulder fields, sand ripples, steep slopes)
3. Science targets of interest (outcrops, formations)

For each identified feature, provide:
- Classification
- Approximate coordinates in the image
- Risk level (1-5)
- Recommended action (traverse, avoid, approach cautiously)

Reply with only a JSON object of the form {{"features": [...]}}."""


def parse_terrain_analysis(text: str) -> dict:
    # Hypothetical helper: assumes the model followed the JSON-only instruction
    return json.loads(text)


def analyze_terrain(orbital_image_b64: str, elevation_data: dict) -> dict:
    """
    Use a vision-language model to analyze Martian terrain
    and identify hazards, safe zones, and optimal routes.
    """
    response = client.messages.create(
        model="claude-sonnet-4-20250514",
        max_tokens=4096,
        messages=[{
            "role": "user",
            "content": [
                {
                    "type": "image",
                    "source": {
                        "type": "base64",
                        "media_type": "image/png",
                        "data": orbital_image_b64,
                    },
                },
                {
                    "type": "text",
                    "text": PROMPT_TEMPLATE.format(elevation=json.dumps(elevation_data)),
                },
            ],
        }],
    )
    return parse_terrain_analysis(response.content[0].text)
```

2. The Digital Twin Verification Pattern

Perhaps the most important engineering lesson from this project is the verification architecture. Claude did not control the rover directly. Its output was verified against a digital twin before any commands reached Mars.

This pattern -- AI generates, simulation verifies, human approves -- is applicable to any safety-critical system:

- Autonomous vehicles

- Medical AI systems

- Industrial automation

- Financial trading systems

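```python
# Pattern: AI-generated output with digital twin verification
import logging
from dataclasses import dataclass
from typing import Any

logger = logging.getLogger(__name__)


@dataclass
class VerifiedPlan:
    plan: Any
    simulation_results: Any
    safety_report: Any
    requires_human_approval: bool = True


class SafeAIPlanner:
    def __init__(self, ai_model, digital_twin, safety_checker, fallback_model):
        # Duck-typed collaborators: ai_model and fallback_model (a hypothetical
        # conservative planner) expose generate_plan(), digital_twin exposes
        # simulate(), and safety_checker.evaluate() returns an object with .passed
        self.ai_model = ai_model
        self.digital_twin = digital_twin
        self.safety_checker = safety_checker
        self.fallback_model = fallback_model

    def plan(self, environment_data: dict) -> VerifiedPlan:
        # Step 1: AI generates a plan
        ai_plan = self.ai_model.generate_plan(environment_data)
        # Step 2: Simulate the plan in the digital twin
        simulation = self.digital_twin.simulate(ai_plan)
        # Step 3: Check safety constraints
        safety_result = self.safety_checker.evaluate(simulation)
        if not safety_result.passed:
            # AI plan failed safety checks -- log and reject
            logger.warning("AI plan rejected: %s", safety_result)
            return self.fallback_plan(environment_data)
        # Step 4: Return verified plan for human approval
        return VerifiedPlan(
            plan=ai_plan,
            simulation_results=simulation,
            safety_report=safety_result,
            requires_human_approval=True,
        )

    def fallback_plan(self, environment_data: dict) -> VerifiedPlan:
        # The conservative plan goes through the same twin and checker,
        # and a human still reviews its safety report before approval
        plan = self.fallback_model.generate_plan(environment_data)
        simulation = self.digital_twin.simulate(plan)
        return VerifiedPlan(plan, simulation, self.safety_checker.evaluate(simulation))
```

3. Graceful Degradation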

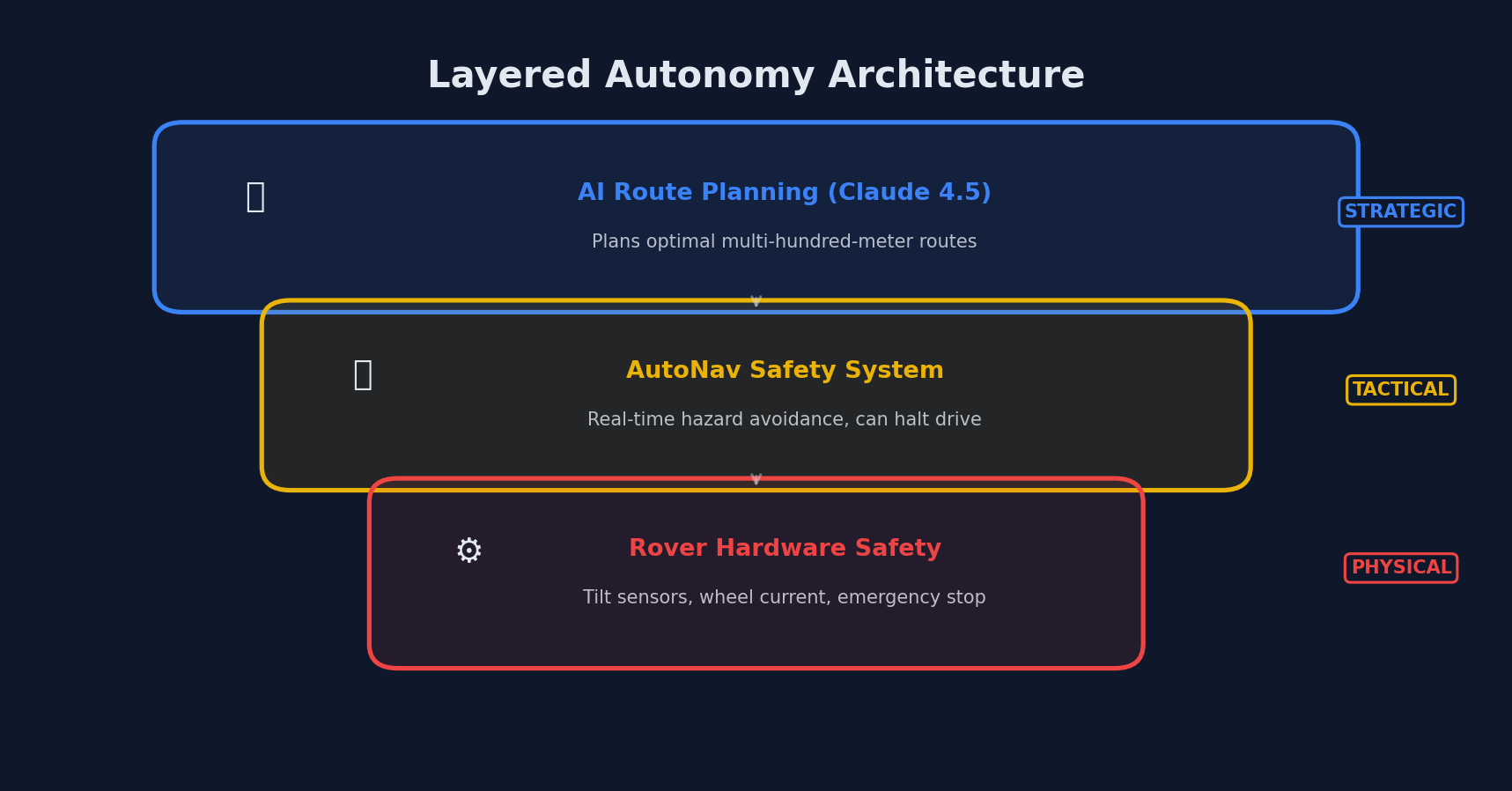

Layered autonomy: AI handles strategy, AutoNav provides tactical safety, hardware enforces physical limits

Even with Claude planning the route, Perseverance's existing AutoNav system remained active as a safety layer. If the rover encountered a hazard that Claude's planning had not anticipated, AutoNav would halt the drive.

This layered autonomy approach -- where AI-planned behavior can be overridden by a simpler, more conservative safety system -- is a pattern every developer building autonomous systems should implement.
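A minimal sketch of that override, assuming a pre-planned list of waypoints and a hypothetical `hazard_detected` callback standing in for the onboard safety system. Real AutoNav monitors continuously while driving; checking once per waypoint is a simplification.

```python
from typing import Callable, Sequence

Waypoint = tuple[float, float]

def execute_drive(waypoints: Sequence[Waypoint],
                  hazard_detected: Callable[[Waypoint], bool],
                  drive_to: Callable[[Waypoint], None]) -> tuple[str, list[Waypoint]]:
    """Follow an AI-planned route, letting a simpler safety check veto each leg."""
    reached: list[Waypoint] = []
    for wp in waypoints:
        # The conservative layer always gets the final say before motion
        if hazard_detected(wp):
            return "halted", reached  # stop and wait for the ground team
        drive_to(wp)
        reached.append(wp)
    return "completed", reached

# Usage with stand-in callbacks: a hazard appears before the third waypoint
log = []
status, reached = execute_drive([(0, 0), (10, 0), (20, 5)],
                                hazard_detected=lambda wp: wp == (20, 5),
                                drive_to=log.append)
print(status, reached)  # halted [(0, 0), (10, 0)]
```

Halting, rather than replanning onboard, keeps the safety layer simple enough to trust: its only job is to say no.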

4. Handling Latency and Disconnection

The multi-minute communication delay to Mars is an extreme version of a problem many distributed systems face: what do you do when you cannot reach the server?

The solution here was to front-load all intelligence into the planning phase. The rover received a complete, self-contained set of instructions that it could execute autonomously. No real-time AI inference was needed during the drive itself.

This pattern applies to:

- Edge AI deployments with intermittent connectivity

- IoT devices in remote locations

- Mobile applications in low-connectivity environments

- Any system where real-time cloud inference is not guaranteed
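One way to make a pre-computed plan self-contained is to ship it with an integrity check, so the device can confirm it received exactly what was approved before acting on it, with no round trip. A sketch assuming a JSON payload and a SHA-256 digest over a canonical serialization; the packet layout and field names are hypothetical.

```python
import hashlib
import json

def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys, no whitespace) so the same plan always hashes the same
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def package_plan(plan_id: str, waypoints: list[list[float]]) -> dict:
    """Bundle everything needed to execute offline, plus a digest of it."""
    body = {"plan_id": plan_id, "waypoints": waypoints}
    return {"body": body, "sha256": _digest(body)}

def verify_plan(packet: dict) -> bool:
    """Runs on the device before execution; needs no network access."""
    return _digest(packet["body"]) == packet["sha256"]

packet = package_plan("drive-001", [[0.0, 0.0], [12.5, 3.0]])
received = json.loads(json.dumps(packet))  # simulate transmission
print(verify_plan(received))               # True
```

A plain hash catches corruption in transit; guarding against deliberate tampering would need a signature or HMAC instead.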

Challenges of Deploying AI in Space

The JPL-Anthropic collaboration also highlights unique challenges that push the boundaries of AI deployment:

Validation at Scale

Verifying more than 500,000 telemetry variables for a single drive sequence represents an enormous validation surface. For developers, this underscores the importance of comprehensive testing for AI-generated outputs, especially in safety-critical domains.
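Comprehensive testing of AI-generated output can start with invariants that must hold for every plan, regardless of how it was produced. A sketch assuming routes are (x, y) points in meters and hazards are circles; both representations and the maximum segment length are hypothetical. Because it checks each segment rather than only the waypoints, it catches a route that cuts across a hazard between two safe points.

```python
import math

def point_segment_distance(p, a, b):
    """Shortest distance from point p to the segment a-b (all (x, y) in meters)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0:
        return math.dist(p, a)
    # Project p onto the segment and clamp to its endpoints
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len2))
    return math.dist(p, (ax + t * dx, ay + t * dy))

def check_route(route, hazards, max_step_m):
    """Return invariant violations; an empty list means every check passed.

    route: list of (x, y) points; hazards: list of (x, y, radius) circles.
    """
    problems = []
    if len(route) < 2:
        problems.append("route needs a start and an end point")
    for i, (a, b) in enumerate(zip(route, route[1:])):
        if math.dist(a, b) > max_step_m:
            problems.append(f"segment {i} longer than {max_step_m} m")
        for j, (hx, hy, r) in enumerate(hazards):
            if point_segment_distance((hx, hy), a, b) <= r:
                problems.append(f"segment {i} passes within hazard {j}")
    return problems

# Both endpoints are safe, but the straight line between them clips a hazard
print(check_route([(0, 0), (10, 0)], hazards=[(5, 1, 2)], max_step_m=20))
# ['segment 0 passes within hazard 0']
```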

Irreversibility

If the rover gets stuck in a sand trap because of a bad AI-generated route, there is no "undo" button. There is no tow truck on Mars. This is the ultimate test of AI reliability -- and it demands the kind of rigorous verification pipeline that JPL implemented.

Environmental Uncertainty

Mars surface conditions can change due to dust storms, thermal expansion, and other factors. The AI system had to work with data that might be hours, days, or months old. Handling data staleness is a challenge in many Earth-bound AI applications as well.
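A simple guard is to give each input source its own maximum age and refuse to plan when any source exceeds it. A sketch with hypothetical source names and allowances; orbital imagery gets a longer allowance than rover camera data on the assumption that large-scale terrain changes more slowly than the local picture.

```python
from datetime import datetime, timedelta, timezone

def stale_sources(timestamps: dict[str, datetime], now: datetime,
                  max_age: dict[str, timedelta]) -> list[str]:
    """Names of inputs older than their allowed age, sorted.

    A source with no configured max_age is treated as stale (fail closed).
    """
    return sorted(
        name for name, captured in timestamps.items()
        if name not in max_age or now - captured > max_age[name]
    )

# Hypothetical allowances per data source
MAX_AGE = {"hirise": timedelta(days=365), "navcam": timedelta(days=2)}
now = datetime(2025, 12, 8, tzinfo=timezone.utc)
inputs = {"hirise": now - timedelta(days=120), "navcam": now - timedelta(days=5)}
print(stale_sources(inputs, now, MAX_AGE))  # ['navcam']
```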

What Comes Next

Vandi Verma, a space roboticist at JPL, described the future trajectory: "We are moving towards a day where generative AI and other smart tools will help our surface rovers handle kilometer-scale drives while minimizing operator workload."

The implications extend beyond Mars:

- Lunar missions: NASA's Artemis program could use similar AI navigation for lunar rovers

- Outer solar system: Missions to Europa, Titan, and other destinations face even greater communication delays

- Earth applications: The patterns proven on Mars can be applied to terrestrial autonomous systems -- underwater vehicles, drones in remote areas, and disaster-response robots

Practical Takeaways for Developers

Vision-language models are not just chatbots. Claude's ability to analyze orbital imagery and generate navigation commands demonstrates that VLMs can perform complex spatial reasoning tasks. Consider where this capability could apply in your domain.

Never trust, always verify. JPL did not send Claude's output directly to a $2.7 billion rover on another planet. They verified it against a digital twin first. Your AI outputs deserve the same rigor, even if the stakes are lower.

Layer your autonomy. Use AI for high-level planning but keep simpler, more predictable safety systems as fallbacks. The combination is more robust than either approach alone.

Design for disconnection. Build systems that can operate autonomously with pre-computed AI plans, rather than requiring real-time inference for every decision.

Document the pipeline. JPL's meticulous documentation of the AI planning pipeline -- from data ingestion through verification to execution -- is a model for how to deploy AI responsibly in any high-stakes application.

The Perseverance rover's AI-planned drives are more than a technological achievement. They are a proof of concept for a new paradigm in autonomous systems: one where generative AI handles the cognitive heavy lifting of planning, while rigorous engineering processes ensure safety and reliability. That is a pattern worth learning from, whether you are navigating Mars or building the next generation of intelligent applications here on Earth.
