If you are building AI-powered software in the United States in 2026, you are navigating one of the most complex regulatory environments in technology history. Thirty-eight states have passed AI-related legislation. The federal government has signed an executive order asserting the right to preempt state laws. The EU AI Act is in force, with its obligations phasing in between 2025 and 2027. And no single, comprehensive federal AI law exists.

For developers, this is not an abstract policy discussion. It is a compliance reality that affects how you build, deploy, and document your AI systems. This guide breaks down the current landscape, identifies the laws most likely to impact your work, and provides practical steps to stay compliant.

The Current Federal Landscape

The United States does not have a comprehensive federal AI regulation. What it has instead is a patchwork of executive orders, agency guidance, and sector-specific regulations.

The Trump Administration's AI Policy Framework

On December 11, 2025, President Trump signed an executive order titled "Ensuring a National Policy Framework for Artificial Intelligence." This order is significant for two reasons:

Pro-innovation stance: It continues the administration's shift toward promoting innovation, which began in January 2025 when the 2023 Biden-era executive order, with its emphasis on mandatory safety testing and reporting requirements for frontier AI models, was revoked.

State preemption signal: The order directs the federal government to review state AI laws deemed "inconsistent" with the national policy framework, raising the possibility of federal preemption of state regulations.

However, and this is critical, the executive order does not automatically preempt existing state laws. State AI regulations remain in full legal effect until Congress passes preemptive legislation or courts rule otherwise. The executive order signals intent, not immediate legal change.

What Federal Agencies Are Doing

In the absence of comprehensive legislation, federal agencies continue to enforce existing laws as they apply to AI:

- FTC: Pursuing enforcement actions against deceptive AI practices under Section 5 of the FTC Act

- EEOC: Applying Title VII to AI-assisted employment decisions

- SEC: Scrutinizing AI-related claims in securities filings

- FDA: Regulating AI/ML-based medical devices through existing frameworks

- NIST: Publishing voluntary AI risk management guidelines (AI RMF)

Key State Laws Taking Effect in 2026

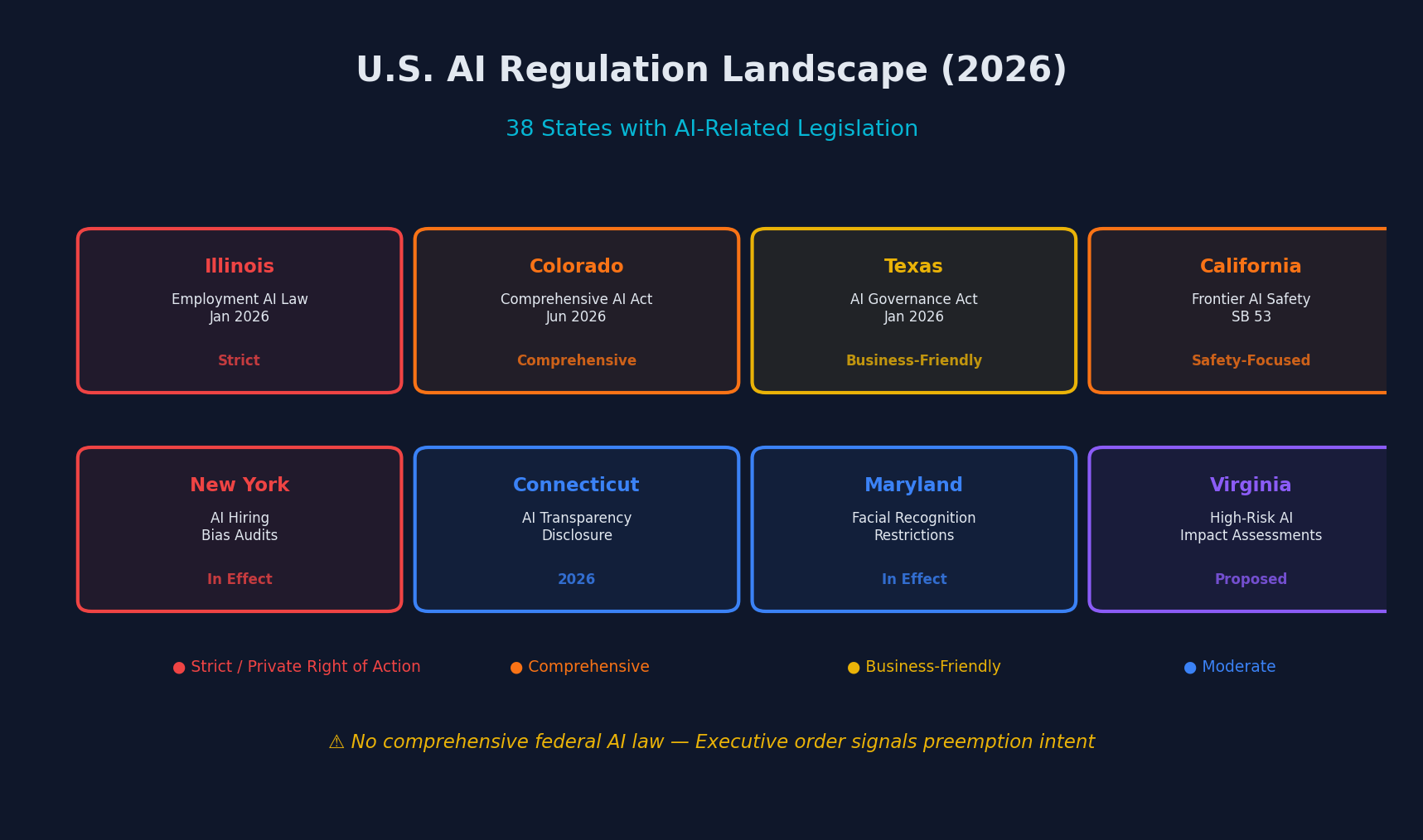

The 2026 AI regulation patchwork: 38 states with AI legislation, each with different requirements


Here is where the regulatory rubber meets the road for developers. Three states have passed particularly significant AI laws that took effect (or will take effect) in 2026.

Illinois: AI in Employment (H.B. 3773)

Effective: January 1, 2026

Illinois amended its Human Rights Act to explicitly address AI in employment decisions. Key requirements:

- Scope: Triggered when AI is used to make decisions on hiring, firing, discipline, tenure, and training

- Disclosure: Companies must notify workers when AI is integrated into workplace decisions

- Private right of action: Individuals can sue employers who violate the law

- Discrimination standard: The law treats AI-driven discrimination the same as human discrimination

Developer Impact: If you build or deploy AI tools used in HR, recruiting, or workforce management, your systems need clear disclosure mechanisms, and audit trails will make it far easier to respond to discrimination claims.
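H.B. 3773 requires notice but does not prescribe a mechanism. A minimal sketch of one approach, assuming you track notices per worker: refuse to run an AI-assisted decision until a notice is on file. `NoticeRegistry`, `require_notice`, and `MissingNoticeError` are hypothetical names, and the in-memory dict stands in for real storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class NoticeRegistry:
    """Hypothetical record of which workers have received an AI-use notice.

    A production system would persist this; the dict is for illustration only.
    """
    notices: dict[str, datetime] = field(default_factory=dict)

    def record_notice(self, worker_id: str) -> None:
        # Store when the worker was told that AI is used in workplace decisions.
        self.notices[worker_id] = datetime.now(timezone.utc)

    def has_notice(self, worker_id: str) -> bool:
        return worker_id in self.notices


class MissingNoticeError(RuntimeError):
    """Raised when an AI-assisted decision is attempted without prior notice."""


def require_notice(registry: NoticeRegistry, worker_id: str, decision_type: str) -> None:
    # Gate: block the AI-assisted step until a notice is on file for this worker.
    if not registry.has_notice(worker_id):
        raise MissingNoticeError(
            f"No AI-use notice on file for {worker_id}; "
            f"cannot run AI-assisted {decision_type} decision."
        )
```

Calling `require_notice(registry, "w-123", "promotion")` at the top of the decision path turns a missing notice into a hard failure instead of a silent compliance gap.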

```python
# Example: Logging AI-assisted employment decisions for compliance
import json
import logging
from datetime import datetime, timezone
from typing import Optional


class AIDecisionLogger:
    """
    Compliance logger for AI-assisted employment decisions.

    Produces an audit trail that supports compliance with
    Illinois H.B. 3773 and similar state laws.
    """

    def __init__(self, system_name: str):
        self.system_name = system_name
        self.logger = logging.getLogger(f"ai_compliance.{system_name}")

    def log_decision(
        self,
        decision_type: str,  # "hiring", "promotion", "termination", etc.
        model_version: str,
        input_features: list[str],  # Feature names used (NOT the values)
        decision: str,
        confidence: float,
        human_reviewer: Optional[str] = None,
    ) -> dict:
        record = {
            # Timezone-aware UTC timestamp (datetime.utcnow() is deprecated).
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "system": self.system_name,
            "decision_type": decision_type,
            "model_version": model_version,
            "features_used": input_features,
            "decision": decision,
            "confidence_score": confidence,
            "human_review": human_reviewer is not None,
            "reviewer": human_reviewer,
        }
        # Emit JSON rather than a Python repr so log pipelines can parse it.
        self.logger.info("AI_DECISION: %s", json.dumps(record))
        return record
```

Texas: Responsible Artificial Intelligence Governance Act (H.B. 149)

Effective: January 1, 2026

Texas took a notably business-friendly approach to AI regulation:

- Sandbox program: Establishes a program for testing AI systems with reduced regulatory risk

- State council: Creates a governance body to oversee compliance and support innovation

- No private right of action: Only the state can enforce the law, not individuals

- Higher bar for discrimination claims: A showing of disparate impact alone is not sufficient to demonstrate discriminatory intent

Developer Impact: Texas is more permissive than Illinois, but it still prohibits certain uses outright, such as deploying AI with the intent to unlawfully discriminate against a protected class. The sandbox program is particularly interesting for developers building experimental AI systems, as it provides a structured environment for testing with reduced legal risk.

Colorado: AI Act (S.B. 24-205)

Effective: June 30, 2026 (postponed from February 1, 2026)

Colorado's law is the most comprehensive state AI regulation in the country, specifically targeting "high-risk AI systems":

- Impact assessments: Required for any high-risk AI system before deployment

- Algorithmic discrimination prevention: Developers and deployers must exercise reasonable care to prevent discriminatory outcomes

- Consumer disclosure: Public statements required about what AI systems are in use and how they manage discrimination risk

- Appeal rights: Individuals must have the opportunity to appeal AI-driven decisions

- Attorney General enforcement: No private right of action; the state AG has exclusive enforcement authority

Developer Impact: This is the most demanding law for developers. If your AI system makes, or is a substantial factor in making, "consequential decisions" (such as employment, credit, housing, insurance, education, health care, legal services, or essential government services), you need documented impact assessments, bias testing procedures, and consumer-facing disclosure mechanisms.
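A first-pass screen for whether a system falls into Colorado's "high-risk" category can be sketched in a few lines. This is a triage aid under stated assumptions, not a legal test: the enum labels paraphrase the eight areas in the statute's definition of a consequential decision, the task list summarizes this section, the statute's exclusions are ignored, and `colorado_checklist` is a hypothetical helper.

```python
from enum import Enum


class ConsequentialDomain(Enum):
    # Paraphrased labels for the eight areas in Colorado S.B. 24-205's
    # definition of a "consequential decision".
    EDUCATION = "education"
    EMPLOYMENT = "employment"
    FINANCIAL_OR_LENDING = "financial_or_lending"
    ESSENTIAL_GOVERNMENT_SERVICE = "essential_government_service"
    HEALTH_CARE = "health_care"
    HOUSING = "housing"
    INSURANCE = "insurance"
    LEGAL_SERVICE = "legal_service"


# Deployer tasks summarized from this section; not an exhaustive legal checklist.
COLORADO_TASKS = (
    "complete an impact assessment before deployment",
    "document reasonable care against algorithmic discrimination",
    "publish a statement on AI systems in use and discrimination risk",
    "provide a way to appeal AI-driven decisions",
)


def colorado_checklist(domains: set[str], substantial_factor: bool) -> list[str]:
    """Return follow-up tasks if a system looks 'high-risk', else an empty list.

    domains: areas the system's decisions touch, as ConsequentialDomain values.
    substantial_factor: True if the system makes, or is a substantial factor
        in making, decisions in those areas.
    """
    covered = {d.value for d in ConsequentialDomain}
    if substantial_factor and domains & covered:
        return list(COLORADO_TASKS)
    return []
```

For example, `colorado_checklist({"employment"}, True)` returns all four tasks, while a marketing-only system returns an empty list and can skip the Colorado-specific work.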

Other States to Watch

| State | Focus Area | Key Requirement | Status |
|---|---|---|---|
| California (SB 53) | Frontier AI safety | Risk frameworks, safety incident reporting, whistleblower protections | Signed Sept 2025 |
| New York City (Local Law 144) | AI in hiring | Bias audits for automated employment decision tools | In effect |
| Maryland | Facial recognition | Applicant consent required before employers use facial recognition in job interviews | In effect |
| Connecticut | AI transparency | Disclosure requirements for AI-generated content | 2026 |
| Virginia | High-risk AI | Impact assessments for high-risk AI systems | Passed in 2025, vetoed by the governor |

The EU AI Act: The Transatlantic Factor

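If your AI system serves users in the European Union, the EU AI Act adds another layer of compliance. It entered into force in August 2024 and applies in phases: its prohibitions have applied since February 2025, and most high-risk requirements are scheduled to apply in August 2026 and August 2027. It takes a risk-based approach: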

- Unacceptable risk: Banned (e.g., social scoring, and real-time remote biometric identification in public spaces for law enforcement, with narrow exceptions)

- High risk: Strict requirements (conformity assessments, transparency, human oversight)

- Limited risk: Transparency obligations (chatbots must disclose they are AI)

- Minimal risk: No specific obligations
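Because a system's obligations follow its riskiest use, a simple internal triage can take the maximum tier across use cases. A minimal sketch, assuming a hand-maintained mapping: the use-case keys and `triage` are hypothetical, the table is deliberately incomplete, and real classification depends on the Act's annexes and guidance.

```python
from enum import IntEnum


class EURiskTier(IntEnum):
    # Ordered so that a higher value means stricter treatment under the Act.
    MINIMAL = 0
    LIMITED = 1
    HIGH = 2
    UNACCEPTABLE = 3


# Hypothetical, incomplete triage table; treat it as a starting checklist.
USE_CASE_TIERS = {
    "social_scoring": EURiskTier.UNACCEPTABLE,
    "cv_screening": EURiskTier.HIGH,
    "credit_scoring": EURiskTier.HIGH,
    "customer_chatbot": EURiskTier.LIMITED,
    "spam_filter": EURiskTier.MINIMAL,
}


def triage(use_cases: list[str]) -> EURiskTier:
    """Return the strictest tier among a system's use cases.

    Unknown use cases default to HIGH so they get a manual review
    instead of silently passing as minimal risk.
    """
    if not use_cases:
        return EURiskTier.MINIMAL
    return max(USE_CASE_TIERS.get(u, EURiskTier.HIGH) for u in use_cases)
```

Defaulting unknown entries to `HIGH` is a design choice: it errs toward review rather than toward under-classification.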

Comparing U.S. and EU Approaches

| Dimension | United States (2026) | EU AI Act |
|---|---|---|
| Structure | Patchwork of state laws | Single comprehensive regulation |
| Approach | Sector and use-case specific | Risk-based classification |
| Enforcement | Varies by state/agency | EU AI Office plus national market surveillance authorities |
| Private right of action | Some states (Illinois) | None in the Act itself; individuals can lodge complaints with national authorities |
| Penalties | Varies | Up to €35 million or 7% of global annual turnover, whichever is higher (for prohibited practices) |
| Pre-market requirements | Generally none (outside sector rules such as FDA device review) | Conformity assessments for high-risk systems |

Practical Compliance Guide for Developers

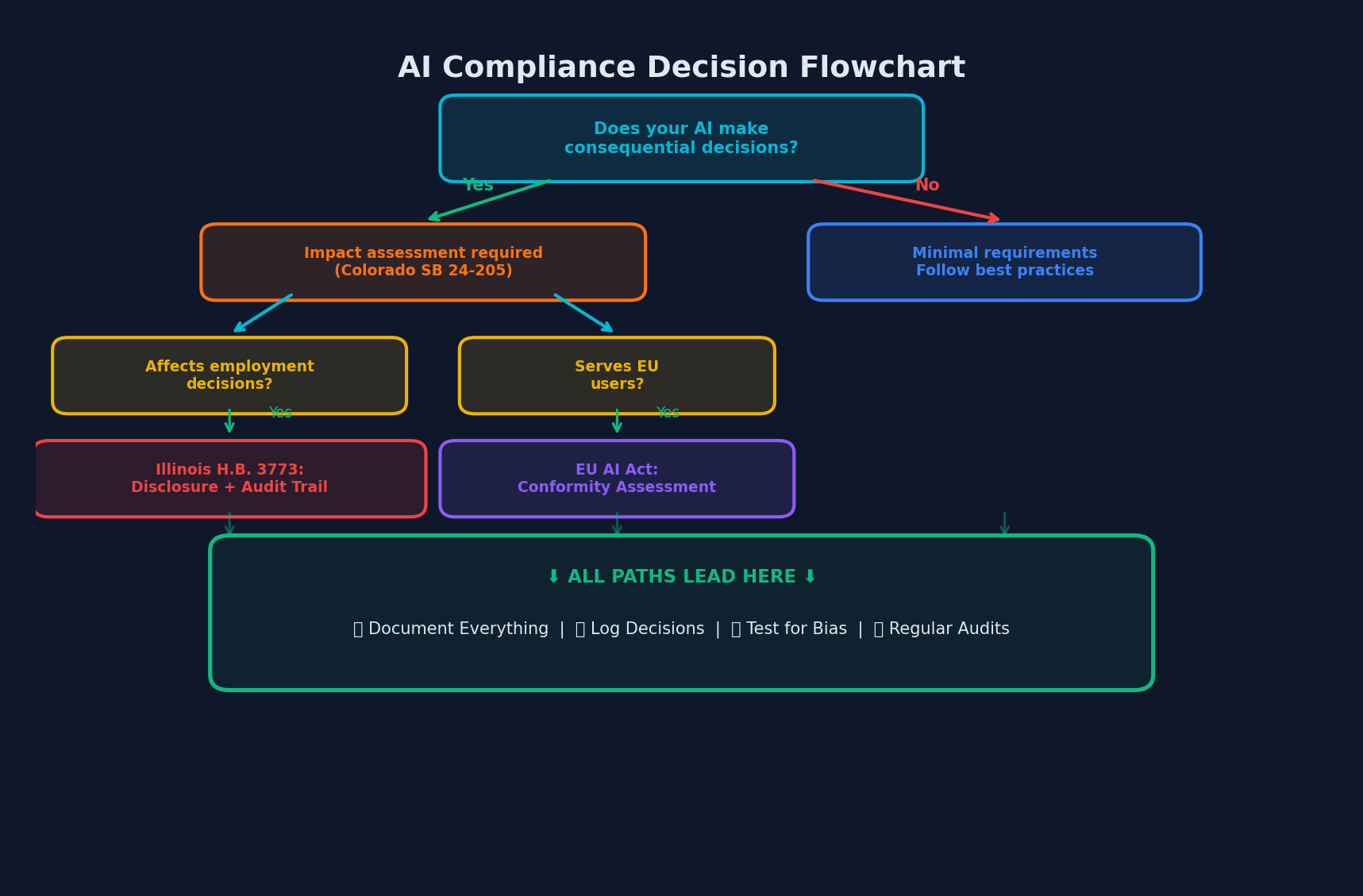

Compliance decision flowchart: all paths lead to documentation, logging, bias testing, and audits


Given this fragmented landscape, here is a practical framework for building AI systems that can withstand regulatory scrutiny across jurisdictions.

1. Implement Comprehensive Logging

Every AI-assisted decision should generate an auditable log entry. Requirements like Colorado's impact assessments and appeal rights are hard to satisfy without such records, and logging is simply good engineering practice.
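One way to make "auditable" credible is to chain each entry to the hash of the one before it, so after-the-fact edits are detectable. None of the laws discussed here mandate this technique; it is a sketch under stated assumptions, `HashChainedLog` is a hypothetical class, entries are held in memory, and records must be JSON-serializable.

```python
import copy
import hashlib
import json
from datetime import datetime, timezone


class HashChainedLog:
    """Append-only decision log; each entry embeds the previous entry's hash.

    Altering or deleting an earlier entry breaks the chain, which verify()
    detects. A real deployment would write to durable, access-controlled storage.
    """

    GENESIS_HASH = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so identical content always hashes identically.
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, record: dict) -> dict:
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else self.GENESIS_HASH
        body = {
            "logged_at": datetime.now(timezone.utc).isoformat(),
            "record": copy.deepcopy(record),  # snapshot; later caller edits don't leak in
            "prev_hash": prev_hash,
        }
        entry = {**body, "entry_hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        prev_hash = self.GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["entry_hash"]:
                return False
            prev_hash = entry["entry_hash"]
        return True
```

One limitation: dropping the newest entries is not detected unless the latest hash is also stored somewhere separate, such as a periodic export.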

```python
# Minimum viable compliance logging schema
DECISION_LOG_SCHEMA = {
    "timestamp": "ISO 8601",
    "system_id": "Unique identifier for the AI system",
    "model_version": "Specific model version used",
    "decision_type": "Category of decision (hiring, credit, etc.)",
    "input_features": "List of feature names (not values) used",
    "output": "The decision or recommendation made",
    "confidence": "Model confidence score",
    "explanation": "Human-readable explanation of the decision",
    "human_in_loop": "Whether a human reviewed the decision",
    "jurisdiction": "Legal jurisdiction of the affected individual",
    "data_retention_expiry": "When this log entry can be deleted",
}
```

2. Build Bias Testing Into Your CI/CD Pipeline

Do not treat bias testing as a one-time audit. Automate it.
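The workflow in this section gates on two fairness metrics with a 0.05 threshold. A dependency-free sketch of those metrics, following the definitions commonly used in fairness libraries such as Fairlearn: demographic parity difference is the largest between-group gap in positive-prediction rate, and equalized odds difference is the larger of the between-group gaps in true-positive and false-positive rate. The function names are assumptions, not the project's actual test code, and binary labels (0/1) are assumed.

```python
from collections import defaultdict


def _gap(rates: list[float]) -> float:
    # Largest minus smallest rate; 0.0 if no group had data for this rate.
    return max(rates) - min(rates) if rates else 0.0


def demographic_parity_difference(y_pred, groups) -> float:
    """Largest between-group gap in the rate of positive (1) predictions."""
    positives, totals = defaultdict(int), defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return _gap([positives[g] / totals[g] for g in totals])


def equalized_odds_difference(y_true, y_pred, groups) -> float:
    """Larger of the between-group gaps in true-positive and false-positive rates."""
    # Per group: [true positives, actual positives, false positives, actual negatives]
    counts = defaultdict(lambda: [0, 0, 0, 0])
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        if truth == 1:
            c[1] += 1
            c[0] += int(pred == 1)
        else:
            c[3] += 1
            c[2] += int(pred == 1)
    # Groups with no actual positives (or negatives) are skipped for that rate.
    tprs = [c[0] / c[1] for c in counts.values() if c[1]]
    fprs = [c[2] / c[3] for c in counts.values() if c[3]]
    return max(_gap(tprs), _gap(fprs))


def fairness_report(y_true, y_pred, groups, dp_max=0.05, eo_max=0.05) -> dict:
    """Compute both metrics and compare them with the thresholds."""
    dp = demographic_parity_difference(y_pred, groups)
    eo = equalized_odds_difference(y_true, y_pred, groups)
    return {
        "demographic_parity_difference": dp,
        "equalized_odds_difference": eo,
        "passed": dp <= dp_max and eo <= eo_max,
    }
```

The returned dict can be written with `json.dump` to produce a report file like the `bias_report.json` artifact the workflow archives. Note that equal selection rates can still hide unequal error rates, which is why both metrics are checked.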

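```yaml
# Example: GitHub Actions workflow for bias testing
name: AI Bias Audit

on:
  push:
    paths:
      - 'models/**'
      - 'training/**'
  schedule:
    - cron: '0 0 1 * *'  # Monthly

jobs:
  bias-audit:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      - uses: actions/setup-python@v5
        with:
          python-version: '3.12'

      # Assumes the project lists its test dependencies in requirements.txt.
      - name: Install dependencies
        run: pip install -r requirements.txt

      # These flags are not built into pytest; they are assumed to be custom
      # options registered in the project's tests/fairness/conftest.py.
      - name: Run fairness metrics
        run: |
          python -m pytest tests/fairness/ \
            --demographic-parity-threshold=0.05 \
            --equalized-odds-threshold=0.05 \
            --report-format=json \
            --output=bias_report.json

      - name: Check thresholds
        run: python scripts/check_bias_thresholds.py bias_report.json

      # Archive even when a check fails, so failing audits are also on record.
      # GitHub caps artifact retention far below 7 years (90 days by default),
      # so export reports to durable storage for long-term compliance retention.
      - name: Archive compliance report
        if: always()
        uses: actions/upload-artifact@v4
        with:
          name: bias-audit-${{ github.sha }}
          path: bias_report.json
          retention-days: 90
```

3. Design for Disclosure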

Multiple laws require that users know when AI is involved in decisions that affect them. Build this into your UX from the start.

Key disclosure requirements across jurisdictions:

- What: What AI system was used

- How: How the AI influenced the decision

- Why: What factors were considered

- Recourse: How to appeal or request human review
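Modeling the four disclosure elements as one structured object makes it easy to render consistent notices and to catch incomplete ones before they reach users. A minimal sketch: `AIDisclosure` is a hypothetical class, and the wording produced by `render()` is illustrative, not language any statute prescribes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIDisclosure:
    """The four disclosure elements (what, how, why, recourse) as one object."""
    system: str               # What: which AI system was used
    role: str                 # How: how the AI influenced the decision
    factors: tuple[str, ...]  # Why: which factors were considered
    recourse: str             # Recourse: how to appeal or request human review

    def missing_fields(self) -> list[str]:
        # Any empty element means the notice is not ready to show.
        names = ("system", "role", "factors", "recourse")
        return [name for name in names if not getattr(self, name)]

    def render(self) -> str:
        factors = ", ".join(self.factors) if self.factors else "not specified"
        return (
            f"This decision was made with the help of {self.system}. "
            f"Role of the AI: {self.role}. "
            f"Factors considered: {factors}. "
            f"To appeal or request human review: {self.recourse}."
        )
```

For a hypothetical system named "ResumeRanker v2", a UI would call `missing_fields()` first and only display `render()` output when the list is empty.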

4. Implement Impact Assessments

Colorado's law is the clearest on this requirement, but impact assessments are quickly becoming a baseline expectation. Your assessment should document:

- The purpose and intended use of the AI system

- Data sources and training methodology

- Known limitations and failure modes

- Bias testing results across protected categories

- Risk mitigation measures

- Ongoing monitoring procedures
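Keeping the assessment as a structured record, rather than a free-form document, lets you check completeness automatically. A minimal sketch with one field per item in the list above: `ImpactAssessment` and its field names are hypothetical, and the record says nothing about whether the content itself is adequate.

```python
from dataclasses import dataclass, field, fields
from datetime import date


@dataclass
class ImpactAssessment:
    """Hypothetical record with one field per documented item."""
    system_id: str
    completed_on: date
    purpose_and_intended_use: str = ""
    data_sources_and_training: str = ""
    known_limitations: str = ""
    bias_test_results: dict[str, float] = field(default_factory=dict)  # category -> metric
    risk_mitigations: list[str] = field(default_factory=list)
    monitoring_procedures: str = ""

    def gaps(self) -> list[str]:
        """Names of sections that are still empty, in declaration order."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def is_complete(self) -> bool:
        return not self.gaps()
```

Calling `is_complete()` in a release checklist or CI step is one way to ensure no system ships with a blank section.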

5. Adopt a "Highest Common Denominator" Strategy

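Rather than building separate compliance pathways for each state, design your systems to meet the strictest applicable standard (currently Colorado). A system that satisfies Colorado's requirements will cover much of what other state laws demand, but check for jurisdiction-specific obligations it does not address, such as New York City's bias audits for automated employment decision tools.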
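One way to make "strictest standard" concrete is to treat each jurisdiction's obligations as a set and take the union across everywhere you deploy. Anything in that union that your baseline lacks is work the baseline alone will not cover. The labels below are hypothetical simplifications, and real requirements are more detailed and change over time.

```python
# Simplified, hypothetical obligation labels; not a substitute for legal review.
OBLIGATIONS: dict[str, set[str]] = {
    "IL": {"individual_notice", "anti_discrimination"},
    "TX": {"anti_discrimination"},
    "CO": {"individual_notice", "anti_discrimination", "impact_assessment",
           "public_statement", "appeal_process"},
    "NYC": {"bias_audit"},
}


def combined_obligations(jurisdictions: list[str]) -> set[str]:
    """Union of obligations across every jurisdiction a system is deployed in.

    Unknown jurisdictions raise KeyError rather than being silently skipped.
    """
    combined: set[str] = set()
    for j in jurisdictions:
        combined |= OBLIGATIONS[j]
    return combined


def gaps_beyond_baseline(baseline: str, jurisdictions: list[str]) -> set[str]:
    """Obligations that designing only to `baseline` would leave uncovered."""
    return combined_obligations(jurisdictions) - OBLIGATIONS[baseline]
```

With these labels, `gaps_beyond_baseline("CO", ["IL", "TX", "CO", "NYC"])` returns `{"bias_audit"}`: the Colorado baseline covers most of the union but not the audit requirement.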

What Happens Next

The regulatory landscape will continue to evolve rapidly throughout 2026. Key events to watch:

- Colorado AI Act enforcement begins (June 2026): This will be the first real test of comprehensive state AI regulation.

- Federal preemption litigation: Expect legal challenges to the executive order's state preemption provisions.

- Congressional activity: Multiple federal AI bills are in various stages of development, though none are expected to pass before the 2026 midterms.

- EU AI Act enforcement actions: The first enforcement actions under the EU AI Act will set important precedents.

The Bottom Line for Developers

The regulatory landscape for AI in 2026 is undeniably complex, but it is not unmanageable. The developers and organizations that will thrive are those who:

- Treat compliance as a feature, not a burden. Good logging, bias testing, and transparency make your AI systems better, not just more compliant.

- Build for the strictest standard. Design once for Colorado/EU compliance, deploy everywhere.

- Stay informed. The landscape is changing monthly. Designate someone on your team to track regulatory developments.

- Document everything. When regulators come knocking, documentation is your best defense.

The patchwork of state laws and the tension with federal preemption will eventually resolve, likely through either comprehensive federal legislation or judicial decisions. Until then, building robust, transparent, well-documented AI systems is both the safest legal strategy and the best engineering practice.
